Tech Freedom Series

Owning your data means more than having control over it. It means you don’t have to share it with anyone you don’t want to, including large corporations. Yet you should be able to share it with all your devices. If you add a contact on one device, it should appear on all your devices without depending on the cloud or on a Big Tech login that can be revoked without prior notice.

You don’t even need an Internet connection to share your data among your devices. As long as they are on the same wi-fi network, your devices can talk to each other, passing your new calendar event to all of them.

All the mobile apps you need for this are available on F-Droid. Here is the overview to help you get started with F-Droid if you have not installed it yet. Because everything comes from F-Droid, this works on any Android phone, including phones running alternative systems such as LineageOS.

Syncthing

The first app you want to install is Syncthing. Install it on all your devices, including your desktop and laptop computers. It is the backbone of this setup, and it can do much more to help you restore control over your private life.

Syncthing synchronizes a folder and its files among your devices over your wi-fi. When a file changes on one device, the update propagates to every device that shares that folder. You decide which devices get access to which folders, and whether each device can write to a folder or only read it. One common use is sending all the photos and videos you take on your mobile devices to your regular computer.

In this guide, you’ll use it to propagate changes to your contacts, calendar and tasks.

Material Files (optional)

This app, also on F-Droid, is technically optional but very handy for customizing where Syncthing keeps its folders. If you are OK storing the data on your internal storage and aren’t concerned with neatly organizing your Syncthing folders, you don’t need it: Syncthing will suggest and create a folder for you. Fortunately, this data takes up very little space, so it won’t dent your internal storage, and contacts, tasks and calendar all share a single Syncthing folder.

However, if you want the folder on your external SD card, or want to organize your Syncthing folders, Material Files has a Copy Path option that copies the full path of a folder you create so you can paste it into Syncthing. Syncthing cannot browse your file system to find the folder, so you have to paste the entire path if you want to override the default. On an SD card this path can be very ugly and virtually impossible to work out from the usual Files apps.

Contacts

You’ll continue to use the contacts app that came with your Android phone. Because contacts are a core part of Android, they integrate well with other apps that need them, such as email apps that fill in the addresses of people you write to, or the phone app when you call someone.

On a Linux desktop or laptop, I recommend Evolution. On other operating systems, you’ll have to find something that can do the same things. I can only vouch for the Evolution Mail and Calendar app. If I were not on Linux, I’d try Thunderbird next, but I can’t vouch that it has the options needed to integrate.

Because Evolution handles contacts, calendar and tasks in addition to its core feature, email, I’ll focus on Android in the next sections and come back at the end to show how to tie the data to Evolution.

Calendar

The calendar app that came with your Android device will likely work. Open your Calendar app and tap About. If it says “The Etar Project”, you are golden. If not, it may or may not integrate. Either way, you can always install the calendar from The Etar Project via F-Droid if you don’t have a Calendar app or the one you have doesn’t integrate correctly.

Tasks

Two task apps on F-Droid work well: OpenTasks and Tasks.org.

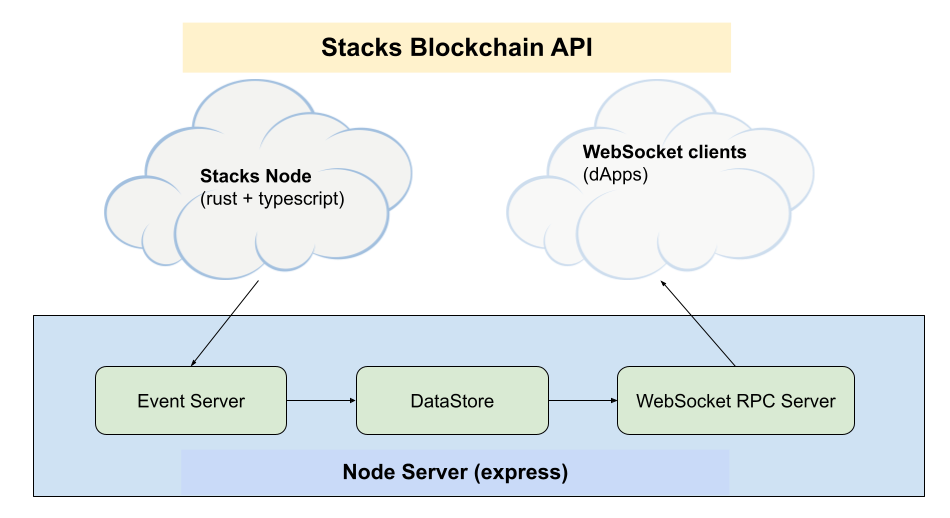

DecSync CC

This app, also on F-Droid, works with Syncthing to replicate your contacts, tasks and calendar events to all your devices. You’ll also need a DecSync client on your PCs, and their GitHub page links to the various options. These include Thunderbird support, which you’ll want to try if you are not on Linux, since Thunderbird runs on all major operating systems.

Note that on Linux, neither Syncthing nor the DecSync software starts automatically. You have to either configure them to start at boot or when you log in, or launch them yourself each time. If they are not running, your data will not update between Evolution/Thunderbird and your Android devices, though your Android devices can still update each other, because the apps can start automatically on Android. Both Syncthing and DecSync leave it up to you to find a way to start them automatically, or to remember to start them after you boot up and log in. I’ll try to update this post one day with how best to do this on Linux.
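One common way to start programs at login on Linux desktops is the XDG autostart mechanism: a .desktop file placed in ~/.config/autostart (or $XDG_CONFIG_HOME/autostart if that variable is set). The Python sketch below writes such entries. The helper names are hypothetical and not part of Syncthing or DecSync, and the commands you pass in are assumptions to adjust to how the programs are installed on your PC. It also assumes the commands contain no spaces in paths, since Exec= lines have their own quoting rules.

```python
from __future__ import annotations

import os
from pathlib import Path


def autostart_dir() -> Path:
    """Per-user autostart folder per the XDG spec: $XDG_CONFIG_HOME/autostart."""
    # An unset or empty XDG_CONFIG_HOME falls back to ~/.config.
    config_home = os.environ.get("XDG_CONFIG_HOME") or str(Path.home() / ".config")
    return Path(config_home) / "autostart"


def desktop_entry(name: str, command: str) -> str:
    """Build a minimal desktop entry that runs `command` when you log in."""
    return (
        "[Desktop Entry]\n"
        "Type=Application\n"
        f"Name={name}\n"
        f"Exec={command}\n"
    )


def install_autostart(filename: str, name: str, command: str,
                      target: Path | None = None) -> Path:
    """Write the entry into `target` (default: the XDG autostart folder)."""
    folder = target or autostart_dir()
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / filename
    path.write_text(desktop_entry(name, command))
    return path
```

For example, install_autostart("syncthing.desktop", "Syncthing", "syncthing") would start Syncthing at your next login; for Radicale, pass the full path of your launcher script as the command, because desktop entries do not expand ~.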

Configuring Syncthing

Now that you have installed all the necessary components, start by getting Syncthing to find all your devices. Each device has an ID that it can display as a QR code. If your other devices can scan QR codes, you can add devices from the Devices tab that way. The code simply encodes a long string that uniquely identifies the device. If you cannot scan it, copy the ID, get it to your other device by whatever means you have, and paste it in to add the device. Repeat until every device has all your other devices added. After that, they’ll have no problem finding each other on wi-fi.
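If you move an ID between devices by hand, it helps to check that it arrived intact. As far as I know, current Syncthing device IDs are 56 characters from the base32 alphabet (A to Z and 2 to 7), usually shown as eight dash-separated groups of seven. The hypothetical Python helper below only checks that shape and reformats the ID; it does not verify the check characters embedded in the ID, so Syncthing itself remains the final judge.

```python
from __future__ import annotations

import re

# 56 characters from the RFC 4648 base32 alphabet (A-Z and 2-7).
_DEVICE_ID = re.compile(r"[A-Z2-7]{56}")


def normalize_device_id(raw: str) -> str | None:
    """Return the ID as eight dash-separated groups of seven, or None if malformed.

    Only the shape is checked: the check characters inside the ID are not verified.
    """
    # Drop spaces, newlines and dashes picked up while copying, then uppercase.
    compact = re.sub(r"[\s-]", "", raw).upper()
    if not _DEVICE_ID.fullmatch(compact):
        return None
    return "-".join(compact[i:i + 7] for i in range(0, 56, 7))
```

Pasting a made-up ID such as "mfzwi3d bonsgyc-YLTMRWG-C43ENR5-QXGZDMM-FZWI3DP-BONSGYY-LTMRWAD" returns it in the usual uppercase, dashed form, while a truncated paste returns None.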

You’ll also see an option to make a device an Introducer. An introducer passes the devices it knows about on to the devices that trust it, so you don’t have to add every device everywhere by hand. Pick only one device as your introducer, and turn that option on for it on all your other devices. Ideally, this is a PC you keep on all the time. This isn’t necessary, but it can make configuration easier. I definitely don’t recommend more than one introducer among your devices.

Next, add a folder on your main device. You can call it “dec”, since it is for propagating your contacts, tasks and calendar. This is where DecSync CC will put all the files your devices need to share this data. In that folder’s Share tab, share it with all your devices. Do not enter a “trusted” PIN. You don’t need one for devices you trust, and it will only complicate things. It can be useful as an extra security measure when sharing folders with friends online, but it isn’t needed when you can verify these are your own devices.
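To confirm from a PC that your devices are connected and the “dec” folder is up to date, you can query Syncthing’s REST API. The Python sketch below assumes the default GUI address http://127.0.0.1:8384 and an API key copied from the GUI’s Settings. The endpoints /rest/system/connections and /rest/db/status are Syncthing’s, but the fields read here (connected, state, needFiles) reflect my reading of its documentation, so verify them. The API also expects the folder ID, which Syncthing generates and which may differ from the “dec” label.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request

API = "http://127.0.0.1:8384"  # Syncthing's default GUI/API address (assumed unchanged)


def get_json(path: str, api_key: str, base: str = API) -> dict:
    """GET a Syncthing REST endpoint, authenticating with the X-API-Key header."""
    req = urllib.request.Request(base + path, headers={"X-API-Key": api_key})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.load(resp)


def connected_devices(connections: dict) -> list[str]:
    """Device IDs marked as connected in a /rest/system/connections reply."""
    return sorted(dev for dev, info in connections.get("connections", {}).items()
                  if info.get("connected"))


def folder_is_synced(status: dict) -> bool:
    """Assumed meaning: folder is idle and needs nothing from other devices."""
    return status.get("state") == "idle" and status.get("needFiles", 0) == 0


def report(api_key: str, folder_id: str) -> None:
    """Print connected devices and the sync state of one folder."""
    conns = get_json("/rest/system/connections", api_key)
    print("Connected devices:", ", ".join(connected_devices(conns)) or "none")
    query = urllib.parse.urlencode({"folder": folder_id})
    status = get_json(f"/rest/db/status?{query}", api_key)
    print(f"Folder {folder_id}: state={status.get('state')}, "
          f"in sync={folder_is_synced(status)}")
```

Call report("your-api-key", "your-folder-id") with your own values; both arguments are placeholders.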

Configuring DecSync CC

On each Android device, use the DecSync CC menu to add your “dec” folder. DecSync CC will then put your data in that folder, and Syncthing will replicate it to all your devices.

Under Settings, set the Task app to the one you chose for tasks (OpenTasks or Tasks.org).

I can’t remember exactly how I set this up, and I’ll update this post the next time I add a device, but you want one collection under Address books, one under Calendars and one under Task list, and they all need to be checked. You can give them any name, such as Business or Personal, since you might keep some collections separate for various reasons.

I believe that once you add collections on one device and Syncthing replicates them, they show up on the other devices. However, you’ll still need to check the checkbox for each collection on each device to integrate it with that device.

That’s it for the Android part. If you add a contact, task or calendar event, it should show up on your other devices, typically within a few minutes.

Configuring Evolution

Although there is a DecSync plug-in for Evolution, I ended up using Radicale DecSync, the option recommended for Thunderbird. Radicale is a small CalDAV and CardDAV server, and this plugin lets it serve your DecSync data to desktop apps. If you don’t have Python 3 installed, you’ll need it to install Radicale. Follow the instructions on their GitHub page.

Following their instructions, create the file ~/.config/radicale/config and copy the configuration from their page into it. Change the decsync_dir setting to point to the “dec” folder you shared with Syncthing on this PC.
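If you prefer to generate the file, here is a Python sketch using configparser. The [storage] section and the type and filesystem_folder values are my recollection of the Radicale DecSync README, so treat them as assumptions and compare them with the page. The sketch expands ~ into an absolute path rather than relying on the plugin to expand it.

```python
from __future__ import annotations

import configparser
from pathlib import Path

CONFIG_PATH = Path.home() / ".config" / "radicale" / "config"


def build_config(decsync_dir: str) -> configparser.ConfigParser:
    """Assemble the [storage] section (values assumed from the project's README)."""
    cfg = configparser.ConfigParser()
    cfg["storage"] = {
        "type": "radicale_storage_decsync",
        "filesystem_folder": str(Path("~/.var/lib/radicale/collections").expanduser()),
        # The folder shared with Syncthing for DecSync on this PC.
        "decsync_dir": str(Path(decsync_dir).expanduser()),
    }
    return cfg


def write_config(decsync_dir: str, path: Path = CONFIG_PATH) -> Path:
    """Write the config file, creating its parent folder if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as fh:
        build_config(decsync_dir).write(fh)
    return path
```

For example, write_config("~/Sync/dec") writes the file for a hypothetical Syncthing folder at ~/Sync/dec; substitute your own path.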

I recommend creating an executable script in a folder on your PATH so Radicale is easier to launch next time. Put these two lines in a text file called radicale:

#!/bin/sh
exec python3 -m radicale --config ~/.config/radicale/config

Make it executable with chmod +x radicale and move it to a folder on your PATH, such as ~/.local/bin if your distribution puts that on the PATH. Now you can just run radicale to launch it once you log in. To run it in the background, use radicale & from your terminal and leave that terminal tab open.

You can verify that it is running by opening this URL in your browser:

http://localhost:5232/
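If you’d rather check from a script, for example before starting Evolution, this Python sketch treats any HTTP answer on that URL as “running” and a refused or timed-out connection as “not running”. The function name is hypothetical; the URL and port are the ones above.

```python
from __future__ import annotations

import urllib.error
import urllib.request


def radicale_is_up(url: str = "http://localhost:5232/", timeout: float = 3.0) -> bool:
    """True if something answers HTTP at `url`, False if nothing is listening."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An error status (such as 401 or 404) still means a server answered.
        return True
    except OSError:
        # Connection refused, timeout, name lookup failure (URLError is an OSError).
        return False


if __name__ == "__main__":
    print("Radicale is running" if radicale_is_up() else "Radicale is not running")
```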

Once you log in (any username and password will do), you’ll see a URL for each of your collections. To add your tasks to Evolution, copy the task list URL. In Evolution, choose New Task List and select CalDAV as the type. Give it a name; I recommend adding something like “(dec)” to the name so it is clear that it comes from DecSync. Paste the URL into the URL field, enter anything in the User field, and click OK. Your tasks, if you have any, should now show up, and you can add tasks from Evolution.

Do the same with New Calendar, but this time copy the calendar URL from the Radicale web page and paste it into the Evolution dialog.

Do the same with New Address Book, selecting CardDAV as the type. Give it a name, copy the address book URL from Radicale, paste it into the Evolution dialog, and enter any user name.
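The URLs shown on Radicale’s page can also be listed with a WebDAV PROPFIND request, which is how CalDAV and CardDAV clients discover collections. The Python sketch below assumes Radicale is on localhost:5232 and accepts any username and password, as described above; the user name me is a placeholder. In Radicale a task list is a calendar collection, so the sketch does not tell calendars and task lists apart.

```python
from __future__ import annotations

import base64
import http.client
import xml.etree.ElementTree as ET

DAV = "{DAV:}"
# resourcetype children that mark CalDAV and CardDAV collections.
KINDS = {
    "{urn:ietf:params:xml:ns:caldav}calendar": "calendar or task list",
    "{urn:ietf:params:xml:ns:carddav}addressbook": "address book",
}
PROPFIND_BODY = (
    '<?xml version="1.0" encoding="utf-8"?>'
    '<propfind xmlns="DAV:"><prop><resourcetype/><displayname/></prop></propfind>'
)


def parse_collections(xml_data: str | bytes) -> list[tuple[str, str, str]]:
    """Return (href, display name, kind) for each calendar or address book."""
    found = []
    for response in ET.fromstring(xml_data).iter(f"{DAV}response"):
        rtype = response.find(f".//{DAV}resourcetype")
        if rtype is None:
            continue
        href = response.findtext(f"{DAV}href", default="")
        name = response.findtext(f".//{DAV}displayname", default="")
        for child in rtype:
            if child.tag in KINDS:
                found.append((href, name, KINDS[child.tag]))
    return found


def list_collections(user: str = "me", host: str = "localhost",
                     port: int = 5232) -> list[tuple[str, str, str]]:
    """PROPFIND the user's home collection one level deep and parse the reply."""
    token = base64.b64encode(f"{user}:anything".encode()).decode()
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("PROPFIND", f"/{user}/", body=PROPFIND_BODY.encode(),
                     headers={"Depth": "1",
                              "Content-Type": "application/xml; charset=utf-8",
                              "Authorization": f"Basic {token}"})
        return parse_collections(conn.getresponse().read())
    finally:
        conn.close()
```

Prefix each returned href with http://localhost:5232 to get the URL to paste into Evolution.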

You should now be able to view these items in the Contacts, Calendar and Tasks views of Evolution. Be sure they are all checked to enable them.

When you write an email, you can now use your Dec contacts. When you create a calendar event, you can choose your Dec calendar to create it in, and the same goes for tasks. Items created on your mobile devices should appear in Evolution, items you create in Evolution should appear on your mobile devices, and any device should be able to edit or delete them.